Is it possible to seamlessly blend real-life products into AI-generated scenes? How much control can we achieve over visual consistency and creative expression?

At Octagram, we’re always looking for ways to integrate generative AI into our workflow, and we are actively experimenting with a hybrid approach to digital asset creation and product visualizations.

Why generative AI?

Developments in generative AI are accelerating and will forever change the creative process. Just as Photoshop and 3D software have opened new horizons for creativity, so will generative AI.

AI capabilities are already disrupting virtually every industry sector. Although generative AI is still in its infancy, with ChatGPT released only in 2022, it has already demonstrated its potential through various tools and applications.

This technological wave represents a productivity revolution and, early as it is, we think we should not ignore it. The pace of AI development raises the question: what capabilities might this technology enable over the next five years?

At Octagram, we choose to experiment with AI while acknowledging that its uses and applications need further regulation. We want to understand its capabilities in its early stages so we’ll be ready to devise innovative use cases once generative AI matures.

Our experiment

We set out to explore several aspects of AI-generated imagery, focusing on a real-life product (headsets) as our central theme.

The experiment aimed to:

- Generate hero shots of headsets, showcasing the product in isolation. We wanted to see how AI could handle product visualisation for e-commerce beauty shots.

- Create lifestyle images featuring fictional characters/models wearing the headsets. This allowed us to explore how AI could blend products into realistic human use contexts.

- Achieve character/model consistency while altering camera angles, backgrounds, and facial expressions. We were curious about AI’s ability to maintain a consistent look for a character across various scenes and moods.

- While not covered in detail in this article, we also looked into the possibility of replacing generic headsets with real-life branded products in AI-generated images.

Through this process we aimed to understand how the use of generative AI could replace part of the conventional process of producing imagery for e-commerce. One scenario is that only product studio photo shoots would be needed, while the beauty shots and lifestyle imagery featuring the product could be produced with the help of generative AI, leading to considerable budget savings.

Our approach

We started by asking the AI model to generate headset designs. This gave us a range of options to work with. From there, we moved on to creating images of people/models wearing the headsets. We played around with different angles and backgrounds, trying to achieve a consistent look for our characters across various scenes.

Our first attempts looked a bit stiff. We realized music is about feeling good, so we aimed for happier expressions.

It took some back-and-forth, but we eventually captured the right emotions.

In later iterations we focused on keeping models looking consistent, making them visually appealing, and capturing the right emotions. It was a balancing act, but we got closer to our vision with each iteration.

Behind the scenes

Our process used Stable Diffusion in Krita to generate initial images. We then refined these using custom workflows in ComfyUI or Krita, depending on the specific needs of each image. For character consistency, we focused on maintaining key features while allowing for variations in pose and expression. This often involved multiple iterations, tweaking prompts and settings to achieve the desired results.

When working on compositions, we used a batch generation approach, creating multiple versions of each scene. This allowed us to curate the best outcomes and iterate further by adding, moving, or removing elements as needed. The process was time-intensive but crucial for achieving high-quality results.
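The batch idea can be sketched in a few lines of Python. This is an illustration only, not our actual Krita or ComfyUI setup: the names `Job`, `plan_batch`, and `keep` are hypothetical, and the sketch assumes that each version of a scene differs only by its random seed.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Job:
    """One planned generation: a prompt plus the seed that makes it repeatable."""
    prompt: str
    seed: int


def plan_batch(prompt: str, count: int, base_seed: int = 0) -> List[Job]:
    """Return `count` jobs that share a prompt but use distinct seeds.

    The seeds are drawn from a generator seeded with `base_seed`,
    so the same plan can be rebuilt later.
    """
    rng = random.Random(base_seed)
    seeds = rng.sample(range(2**32), count)  # sampling without replacement: no duplicates
    return [Job(prompt, seed) for seed in seeds]


def keep(jobs: List[Job], chosen: List[int]) -> List[Job]:
    """Keep only the jobs a human reviewer picked, by index into the batch."""
    return [jobs[i] for i in chosen]


if __name__ == "__main__":
    batch = plan_batch("model wearing over-ear headset, city street", count=8, base_seed=42)
    shortlist = keep(batch, [1, 5])
    for job in shortlist:
        print(job.seed, job.prompt)
```

Recording the seed of each curated image is what makes a later iteration possible: the same seed and prompt can be fed back into the generator before adding, moving, or removing elements.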

Placing branded products in AI-generated images?

It is possible to render real products in AI-generated imagery. To represent a product accurately using generative AI, we need to start with sharp, high-resolution images of the actual product (ideally professionally shot in the studio). These serve as the training set from which the AI learns to render the product accurately.

If the product is not perfectly rendered, the image will need additional retouching in Photoshop.

The key challenge is the seamless integration of real images into the virtual environment. This involves paying attention to light sources and ensuring that the product’s shadows and highlights match the generated environment. Color balance is another critical factor. We had to adjust the product’s colors to fit naturally within the AI-generated scene.
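To make the color balance step concrete, here is a deliberately crude sketch. It is not the adjustment we made by hand; it assumes a simple global technique (shifting the product's average color toward the scene's average), and the function names and the `strength` parameter are hypothetical. Pixels are plain RGB tuples so the example needs no image library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def channel_means(pixels: List[Pixel]) -> Tuple[float, float, float]:
    """Average value of the R, G and B channels over a list of pixels."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def match_color_balance(product: List[Pixel], scene: List[Pixel],
                        strength: float = 1.0) -> List[Pixel]:
    """Shift each product pixel toward the scene's average color.

    strength=0.0 leaves the product untouched; strength=1.0 moves the
    product's mean color all the way onto the scene's mean color.
    """
    p_mean = channel_means(product)
    s_mean = channel_means(scene)
    shift = [(s - p) * strength for p, s in zip(p_mean, s_mean)]
    return [
        tuple(min(255, max(0, round(value + delta)))  # clamp to the 8-bit range
              for value, delta in zip(pixel, shift))
        for pixel in product
    ]


if __name__ == "__main__":
    product = [(100, 100, 100), (120, 120, 120)]  # neutral grey product pixels
    scene = [(130, 110, 90)]                      # warm-toned scene
    print(match_color_balance(product, scene, strength=0.5))
```

A partial strength is usually the safer choice here, since a full shift would tint brand colors that need to stay recognizable. Matching shadows and highlights is a separate problem that a global shift like this does not address.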

As a final step, we used upscaling techniques to add refinement and detail, particularly to the product itself. This helped maintain the crisp, realistic look of the actual headset within the AI-generated context.

While this process requires additional fine-tuning, it proves that a hybrid image generation approach could lead to significant savings in producing imagery for e-commerce.

What we learned

This project revealed both the potential and limitations of AI in design and marketing. We found that AI excels at generating diverse concepts quickly, which can significantly speed up the ideation phase. However, achieving precise, brand-specific results often requires extensive fine-tuning and human guidance.

One key insight was the importance of prompt engineering. The way we framed our requests to the AI dramatically affected the output. We learned to be more specific in our prompts, describing not just the object but the style, mood, and context we wanted.
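One way to keep prompts specific is to treat them as structured data rather than free text. The sketch below is an illustration under that assumption: `PromptSpec` and its fields are hypothetical names that mirror the four things we learned to describe (object, style, mood, and context), and the comma-separated output is just one common prompt convention.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """A prompt broken into the parts we found worth spelling out."""
    subject: str
    style: str = ""
    mood: str = ""
    context: str = ""

    def render(self) -> str:
        """Join the non-empty parts into a single comma-separated prompt."""
        parts = [self.subject, self.style, self.mood, self.context]
        return ", ".join(p.strip() for p in parts if p.strip())


if __name__ == "__main__":
    spec = PromptSpec(
        subject="young woman wearing wireless over-ear headset",
        style="editorial lifestyle photography",
        mood="joyful, relaxed",
        context="sunlit apartment",
    )
    print(spec.render())
```

Keeping the parts separate also makes it easy to vary one of them, for example the mood, while holding the rest of the prompt fixed.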

We also discovered that while AI can create impressive images, it sometimes struggles with complex details like hands or the exact text in logos. This reminded us of the continued importance of human oversight and post-processing in the creative workflow.

The future of generative AI

We’re just scratching the surface of what’s possible with generative AI. As we keep exploring, we’ll share what we learn. The future of creativity is evolving, and we’re excited to be part of that journey.

Octagram is committed to integrating new technologies and optimizing digital asset production workflows through a symbiosis of human creativity and artificial intelligence augmentation.

However, we are aware that widespread AI adoption necessitates a rigorous examination of the ethical implications, legal factors, and inherent biases or inaccuracies potentially introduced through training data. Nonetheless, the creative opportunities enabled by this technology are sufficiently compelling to dedicate time for further exploration.

Related Posts

June 25, 2025

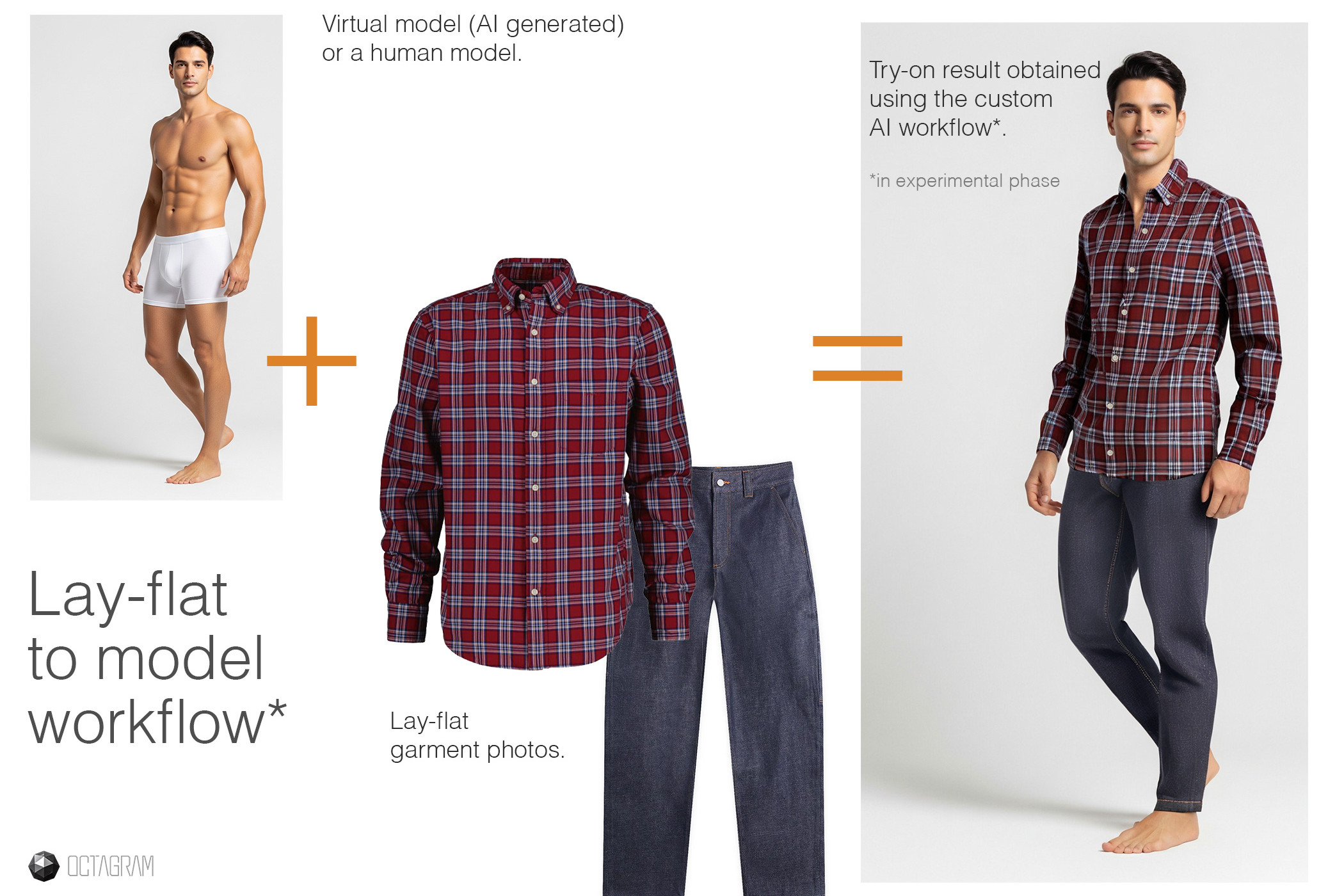

“Lay-flat to model” generative A.I. workflow – asset production for e-commerce

A "lay-flat to model" custom AI workflow aimed at reducing costs and the time…

March 8, 2024

Exploring E-commerce Asset Production with Generative AI

We push the boundaries of e-commerce asset production with Generative AI,…

April 25, 2023

Generative AI – overcoming randomness in Midjourney

Recent A.I. developments have had a significant impact on the creative and…